Support Vector Machines

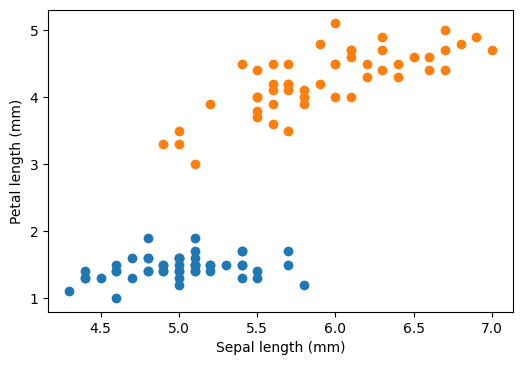

Support Vector Machine (SVM) is a technique used for binary classification. Let us consider a categorical data:

from sklearn import datasets

import matplotlib.pyplot as plt

%matplotlib inline

plt.rcParams["figure.dpi"] = 100

plt.rcParams["figure.facecolor"] = "white"

iris = datasets.load_iris()

data = iris.data

# data[:4, :]

# iris.feature_names

# iris.target

sepal_length = data[:, 0]

petal_length = data[:, 2]

# here we will pick two categories, the dataset has three differnt target values

plt.scatter(sepal_length[iris.target == 0], petal_length[iris.target == 0])

plt.scatter(sepal_length[iris.target == 1], petal_length[iris.target == 1])

plt.xlabel("Sepal length (mm)")

plt.ylabel("Petal length (mm)")

plt.show()

The question is: how can we draw a boundary (here a straight line in two dimensional plane) that separates two categorical data. On an -dimensional dataset, we will have a dimensional hyperplane separating the two categories.

where

Perceptron

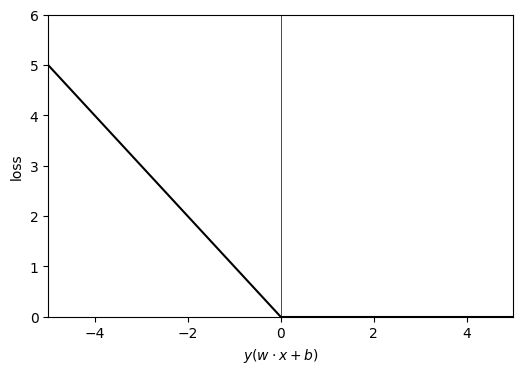

First, let us have a look at the perceptron algorithm. This algorithm finds a linear separator based on Stochastic Gradient Descent. Say we start with an arbitrary line: ( and are parameters), and say our labels . Our line should separate our training data points in two parts. The points on one side is and on the another . What could be a suitable loss function? Of course, there are many possibilities. One simple possibility could be, we calculate , if we are right (i.e., predicts the correct label or ), there is no loss. If we are wrong, we assign loss = .

Our loss function is convex. We reach for solution by starting with an arbitrary and , then we go through our data sets and calculate . If we are right, there is no loss and no update to our and . If we are wrong, we change and along the negative gradient (Stochastic Gradient Descent) by following amount:

where is the step size (learning rate), we could even consider it equal to 1 for simplicity.

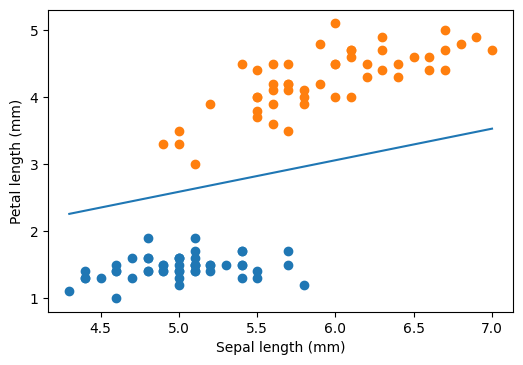

We follow this perceptron rule for all data points (called epoch), and start a new epoch by going through the data again. The data points are shuffled before each epoch. Once there are no more updates after going through all the data points, we have reached the solution. If the dataset is linearly separable, perceptron algorithm is guaranteed to find a solution. Notice that the learning process is stochastic, it will produce different outcome each time (albeit each solution will correctly classify our linearly separable dataset).

from sklearn.linear_model import Perceptron

model = Perceptron(random_state=np.random.randint(100))

# choose the data only for category 0 and 1 (i.e., < 2)

# here tile creates 4 columns out of single boolean column

# later reshape is necessary as it returns a one dimensional array

data_copy = np.reshape(data[np.tile(np.array([iris.target < 2]).transpose(), \

(1, 4))], (-1, 4))

# choose only sepal length and petal length cols

X = np.array([data_copy[:, 0], data_copy[:, 2]]).T

y = iris.target[iris.target < 2]

model.fit(X, y)

# get the separating hyperplane

w = model.coef_[0]

a = -w[0] / w[1]

xx = np.linspace(min(X[:, 0]), max(X[:, 0]))

yy = a * xx - (model.intercept_[0]) / w[1]

plt.scatter(sepal_length[iris.target == 0], petal_length[iris.target == 0])

plt.scatter(sepal_length[iris.target == 1], petal_length[iris.target == 1])

plt.plot(xx, yy)

plt.xlabel("Sepal length (mm)")

plt.ylabel("Petal length (mm)")

plt.show()

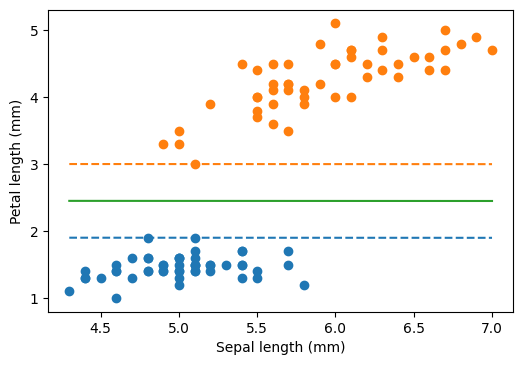

Hard margin

We can see that there are many possible straight lines that perfectly separates our training data points. Then which one to choose? We will pick the one which will have maximum margin. Margin is the distance between the separator line and nearest points on each side. The separator depends only on the points over the margins, and hence the naming support vectors.

from sklearn.svm import SVC

model = SVC(kernel='linear', C=1000)

model.fit(X, y)

# get the separating hyperplane

w = model.coef_[0]

a = -w[0] / w[1]

xx = np.linspace(min(X[:, 0]), max(X[:, 0]))

yy = a * xx - (model.intercept_[0]) / w[1]

# plot the parallels to the separating hyperplane that pass through the

# support vectors (margin away from hyperplane in direction

# perpendicular to hyperplane). This is sqrt(1+a^2) away vertically in

# 2-d.

margin = 1 / np.sqrt(np.sum(model.coef_ ** 2))

yy_down = yy - np.sqrt(1 + a ** 2) * margin

yy_up = yy + np.sqrt(1 + a ** 2) * margin

plt.scatter(sepal_length[iris.target == 0], petal_length[iris.target == 0])

plt.scatter(sepal_length[iris.target == 1], petal_length[iris.target == 1])

plt.plot(xx, yy_down, "--")

plt.plot(xx, yy_up, "--")

plt.plot(xx, yy)

plt.xlabel("Sepal length (mm)")

plt.ylabel("Petal length (mm)")

plt.show()

Soft margin

What happens when the data is not linearly separable? Or even we might want to maximize the margin by giving up some data point (which might well be outliers)?

Kernel methods

How do we approach the problem when the data does not represent classes with linear boundary? One of the methods is to expand the data into a higher order space, and then use the linear boundary methods such as SVM. For example, in case of quadratic boundary, we can expand extra basis vectors such as , ,..., , , ... along with feature space the , , ... In such a higher dimension, the boundary is linear (though it is quadratic or higher order polynomial in actual feature space).