High Performance Computing

Computationally heavy calculations require access to high performance computing (HPC) facilities.

Useful UNIX commands

Connect to a login node via ssh:

ssh {username}@atlas9.nus.edu.sg

Secure copy files between local and remote machines:

scp {username}@10.10.0.2:/remote/file.txt /local/directory

scp local/file.txt {username}@10.10.0.2:/remote/directory

Check disk usage:

du -hs .

du -hs /path/
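
When a quota is nearly full, it helps to see which subdirectories take up the space. A minimal sketch, assuming GNU du and sort are available (largest_dirs is a hypothetical helper name, not a standard command):

```shell
# List the immediate subdirectories of a directory, sorted by size
# (largest towards the end). Assumes GNU coreutils: du --max-depth
# and sort -h. largest_dirs is an illustrative name.
largest_dirs() {
    local dir=${1:-.}
    du -h --max-depth=1 "$dir" | sort -h
}

# Usage: largest_dirs /path/
```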

Synchronize two folders with rsync (note that --delete removes files from the destination that are absent from the source):

rsync -azhv --delete /source/my_project/ /destination/my_project

Running jobs at NUS HPC

Check your storage quota:

hpc s

PBS commands:

hpc pbs summary

Example scripts for job submission:

hpc pbs script parallel20

hpc pbs vasp

List available modules:

module avail

Load a module:

module load {module-name}

Purge loaded modules:

module purge

Quantum Espresso is already installed on the NUS HPC clusters. Here is a sample job script:

#!/bin/bash

#PBS -q parallel24

#PBS -l select=2:ncpus=24:mpiprocs=24:mem=96GB

#PBS -j eo

#PBS -N qe-project-xx

source /etc/profile.d/rec_modules.sh

module load espresso6.5-intel_18

## module load espresso6.5-Centos6_Intel

cd $PBS_O_WORKDIR;

np=$( cat ${PBS_NODEFILE} | wc -l );

mpirun -np $np -f ${PBS_NODEFILE} pw.x -inp qe-scf.in > qe-scf.out

Note that the lines beginning with #PBS are PBS directives, not comments: bash ignores them, but qsub reads them. For actual comments, I use ##.
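
The np line in the job script counts the entries in the node file that PBS writes for the job, which has one line per MPI process. A small sketch with a mock node file (the host names are made up for illustration) reproduces the count for the select=2 and mpiprocs=24 request:

```shell
# Mock of the file that PBS exposes as $PBS_NODEFILE: one line per MPI
# process. The host names node01/node02 are invented for illustration.
nodefile=$(mktemp)
for host in node01 node02; do
    for i in $(seq 24); do
        echo "$host"
    done
done > "$nodefile"

# Same count as in the job script, without the extra cat.
np=$(wc -l < "$nodefile")
echo "MPI processes: $np"
rm -f "$nodefile"
```

Two chunks with 24 MPI processes each give 48 lines, so mpirun is started with 48 processes.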

List the available queues:

qstat -q

Check the status of a particular queue:

qstat -Qx parallel24

Submitting a job:

qsub pbs_job.sh

Check running jobs:

qstat

Details about a job:

qstat -f {job-id}

Stopping a job:

qdel {job-id}
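
qsub prints the identifier of the new job on standard output, so a script can capture it and reuse it with qstat -f or qdel. A minimal sketch (submit_job is a hypothetical helper name; pbs_job.sh is the script name used above):

```shell
# Submit a PBS script and hand back the job ID that qsub prints.
# submit_job is an illustrative helper, not part of PBS.
submit_job() {
    local script=$1 job_id
    job_id=$(qsub "$script") || return 1
    echo "$job_id"
}

# Usage on a login node:
#   job_id=$(submit_job pbs_job.sh)
#   qstat -f "$job_id"    # inspect the job
#   qdel "$job_id"        # cancel it if needed
```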

Abort and restart a calculation

If you need to modify certain parameters while the program is running, e.g., you want to change the mixing_beta value because the SCF accuracy is oscillating without any sign of convergence, create an empty file named {prefix}.EXIT in the directory where you have the input file, or in the outdir set in the &CONTROL namelist of the input file.

touch {prefix}.EXIT

That will stop the program at the next iteration and save the state. To restart, set restart_mode in the &CONTROL namelist to 'restart' and re-run after making the necessary changes. You must re-submit the job with the same number of processors.

&CONTROL

...

restart_mode = 'restart'

...

/
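
The stop-and-restart steps above can also be scripted. The sketch below assumes one keyword per line, lowercase keyword names, and &CONTROL on its own line; request_stop and set_restart_mode are hypothetical helper names, and qe-scf.in is the input file name from the job script above.

```shell
# Create {prefix}.EXIT next to a QE input file to request a clean stop.
# Assumes a line of the form  prefix = 'name'  (single or double quotes).
request_stop() {
    local input=$1 prefix
    prefix=$(sed -n "s/^[[:space:]]*prefix[[:space:]]*=[[:space:]]*['\"]\([^'\"]*\)['\"].*/\1/p" "$input" | head -n 1)
    [ -n "$prefix" ] || { echo "no prefix found in $input" >&2; return 1; }
    touch "$(dirname "$input")/${prefix}.EXIT"
}

# Set restart_mode = 'restart' in the &CONTROL namelist of an input file.
set_restart_mode() {
    local input=$1 tmp
    tmp=$(mktemp) || return 1
    if grep -q '^[[:space:]]*restart_mode[[:space:]]*=' "$input"; then
        # Replace the existing value, keeping the indentation.
        sed "s/^\([[:space:]]*\)restart_mode[[:space:]]*=.*/\1restart_mode = 'restart'/" "$input" > "$tmp"
    else
        # No restart_mode yet: add one right after the &CONTROL line.
        awk '{ print } $1 == "&CONTROL" { print "  restart_mode = '\''restart'\''" }' "$input" > "$tmp"
    fi
    # Overwrite in place so the original file permissions are kept.
    cat "$tmp" > "$input" && rm -f "$tmp"
}

# Usage:
#   request_stop qe-scf.in        # while the job is running
#   set_restart_mode qe-scf.in    # before re-submitting
```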

Compiling Quantum Espresso using Intel® Math Kernel Library (MKL)

If you need a newer or specific version of Quantum Espresso that is not installed on the NUS clusters, or you have modified the source code yourself, here are the steps I followed to compile it successfully.

The Quantum Espresso project is primarily hosted on GitLab, with a mirror maintained on GitHub. Check the GitLab repository for more up-to-date information. Releases can be found at: https://gitlab.com/QEF/q-e/-/releases

Download and decompress the source files.

wget https://gitlab.com/QEF/q-e/-/archive/qe-7.2/q-e-qe-7.2.tar.gz

tar -zxvf q-e-qe-7.2.tar.gz

Load the necessary modules (applicable for NUS clusters, last checked in Jun 2022):

module load xe_2018

module load fftw/3.3.7

Go to QE directory and run configure:

cd q-e-qe-7.2

./configure

You will see output similar to:

...

BLAS_LIBS= -lmkl_intel_lp64 -lmkl_sequential -lmkl_core

LAPACK_LIBS=

FFT_LIBS=

...

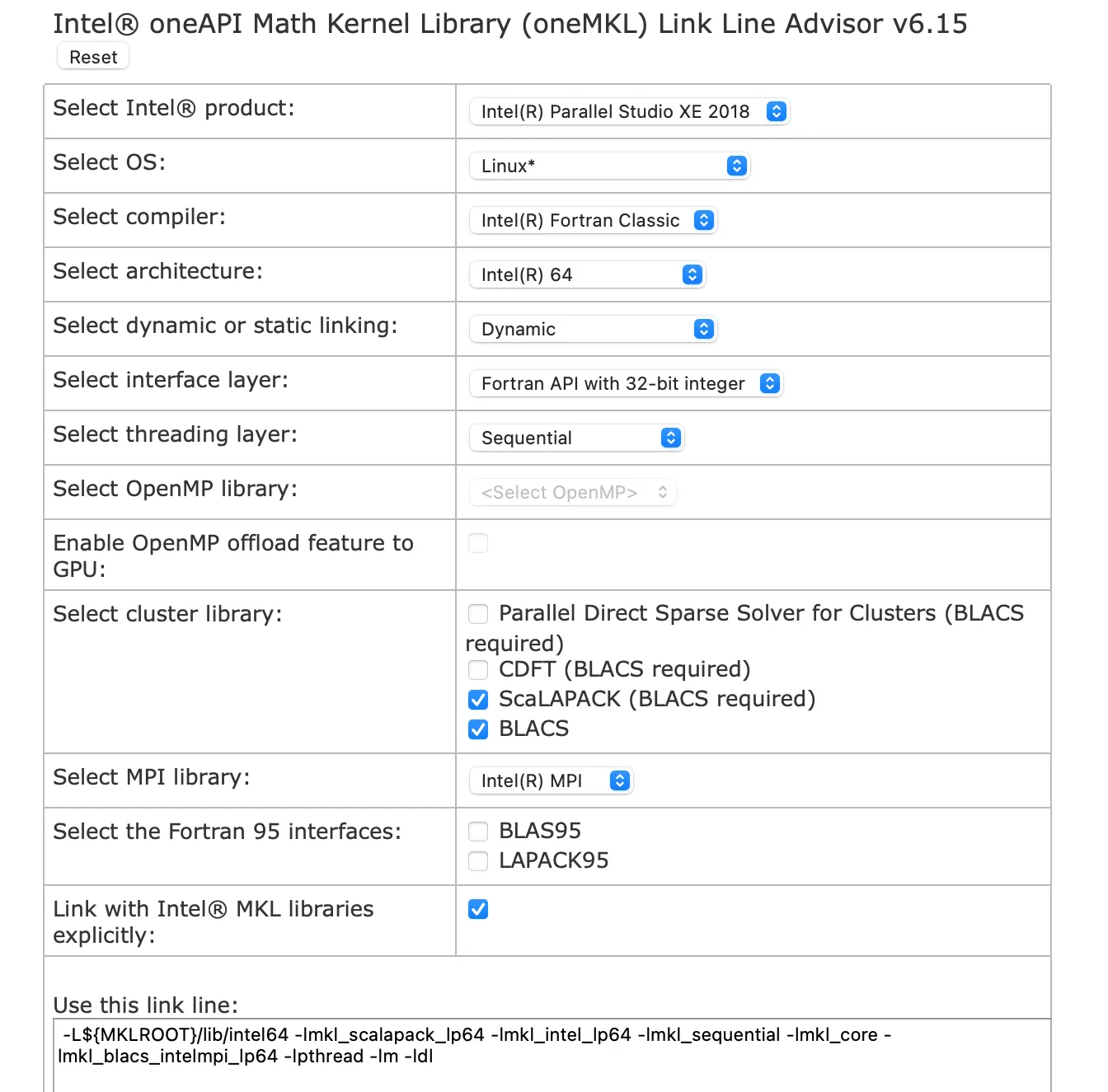

In my case, LAPACK_LIBS and FFT_LIBS were not detected automatically, so we need to specify them manually. First, get the link line specific to your version of MKL and your configuration from the Intel link advisor.

In my case, the link line was:

-L${MKLROOT}/lib/intel64 -lmkl_scalapack_lp64 -lmkl_intel_lp64 -lmkl_sequential -lmkl_core -lmkl_blacs_intelmpi_lp64 -lpthread -lm -ldl

We need to insert this link line for BLAS_LIBS, LAPACK_LIBS, and SCALAPACK_LIBS. We also need to find out where the FFTW library is located. On NUS HPC, the module avail command shows where a particular module is located, usually under /app1/modules/. Open make.inc and make the following changes:

# ...

- CFLAGS = -O2 $(DFLAGS) $(IFLAGS)

+ CFLAGS = -O3 $(DFLAGS) $(IFLAGS)

F90FLAGS = $(FFLAGS) -nomodule -fpp $(FDFLAGS) $(CUDA_F90FLAGS) $(IFLAGS) $(MODFLAGS)

# compiler flags with and without optimization for fortran-77

# the latter is NEEDED to properly compile dlamch.f, used by lapack

- FFLAGS = -O2 -assume byterecl -g -traceback

+ FFLAGS = -O3 -assume byterecl -g -traceback

FFLAGS_NOOPT = -O0 -assume byterecl -g -traceback

# ...

# If you have nothing better, use the local copy

# BLAS_LIBS = $(TOPDIR)/LAPACK/libblas.a

- BLAS_LIBS = -lmkl_intel_lp64 -lmkl_sequential -lmkl_core

+ BLAS_LIBS = -L${MKLROOT}/lib/intel64 -lmkl_scalapack_lp64 -lmkl_intel_lp64 -lmkl_sequential -lmkl_core -lmkl_blacs_intelmpi_lp64 -lpthread -lm -ldl

# If you have nothing better, use the local copy

# LAPACK = liblapack

# LAPACK_LIBS = $(TOPDIR)/external/lapack/liblapack.a

- LAPACK =

+ LAPACK = liblapack

- LAPACK_LIBS =

+ LAPACK_LIBS = -L${MKLROOT}/lib/intel64 -lmkl_scalapack_lp64 -lmkl_intel_lp64 -lmkl_sequential -lmkl_core -lmkl_blacs_intelmpi_lp64 -lpthread -lm -ldl

- SCALAPACK_LIBS =

+ SCALAPACK_LIBS = -L${MKLROOT}/lib/intel64 -lmkl_scalapack_lp64 -lmkl_intel_lp64 -lmkl_sequential -lmkl_core -lmkl_blacs_intelmpi_lp64 -lpthread -lm -ldl

# nothing is needed here if the internal copy of FFTW is compiled

# (needs -D__FFTW in DFLAGS)

- FFT_LIBS =

+ FFT_LIBS = -L/app1/centos6.3/gnu/fftw/3.3.7/lib/ -lmpi

# ...
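
The three MKL substitutions can also be applied with sed, which helps when the build is repeated. This is only a sketch: patch_mkl_libs is a hypothetical helper name, it touches only BLAS_LIBS, LAPACK_LIBS, and SCALAPACK_LIBS, and the link line is the one quoted above, to be replaced with whatever the link advisor gives for your setup.

```shell
# Link line from the Intel link advisor. Single quotes keep ${MKLROOT}
# literal so that make expands it at build time.
MKL_LINK='-L${MKLROOT}/lib/intel64 -lmkl_scalapack_lp64 -lmkl_intel_lp64 -lmkl_sequential -lmkl_core -lmkl_blacs_intelmpi_lp64 -lpthread -lm -ldl'

# Rewrite the uncommented assignments in make.inc; commented lines and
# other variables are left alone. The original is kept as make.inc.bak.
patch_mkl_libs() {
    local file=$1
    sed -i.bak \
        -e "s|^BLAS_LIBS[[:space:]]*=.*|BLAS_LIBS = ${MKL_LINK}|" \
        -e "s|^LAPACK_LIBS[[:space:]]*=.*|LAPACK_LIBS = ${MKL_LINK}|" \
        -e "s|^SCALAPACK_LIBS[[:space:]]*=.*|SCALAPACK_LIBS = ${MKL_LINK}|" \
        "$file"
}

# Usage: patch_mkl_libs make.inc
```

The optimization flags, LAPACK, and FFT_LIBS still need to be edited by hand as shown in the diff.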

Now we are ready to compile:

make -j8 all

The -j8 option runs 8 build jobs in parallel to speed things up. You may add the q-e-qe-7.2/bin directory to your PATH in .bashrc:

echo 'export PATH="/home/svu/{username}/q-e-qe-7.2/bin:$PATH"' >> ~/.bashrc

Don't forget to load the dependencies before calling the QE executables:

module load xe_2018

module load fftw/3.3.7

If you are submitting a job via the PBS queue, you need to provide the full path of the QE executables, e.g., /home/svu/{username}/q-e-qe-7.2/bin/pw.x. PBS will not read your bash settings, and relative paths from your login node will not apply either.
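
A sketch of how the full path might be used in a job script, with an early check that fails with a clear message if the executable is missing. QE_BIN and require_exe are illustrative names, and the default location assumes the source tree was unpacked in the home directory, as in the PATH line above.

```shell
# Absolute location of the self-compiled executables (assumed to be
# under $HOME; override QE_BIN if you built elsewhere).
QE_BIN=${QE_BIN:-$HOME/q-e-qe-7.2/bin}

# Return non-zero with a message if a file is missing or not executable.
require_exe() {
    [ -x "$1" ] || { echo "not executable: $1" >&2; return 1; }
}

# In the job script, after loading the modules:
#   require_exe "$QE_BIN/pw.x" || exit 1
#   mpirun -np $np -f ${PBS_NODEFILE} "$QE_BIN/pw.x" -inp qe-scf.in > qe-scf.out
```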

Installing Intel oneAPI libraries

If you need to install the Intel oneAPI libraries yourself, the following instructions might be useful. Please refer to the Intel website for up-to-date information.

Intel oneAPI Base Toolkit:

wget https://registrationcenter-download.intel.com/akdlm/IRC_NAS/992857b9-624c-45de-9701-f6445d845359/l_BaseKit_p_2023.2.0.49397_offline.sh

# requires gnu-awk

sudo apt update && sudo apt install -y --no-install-recommends gawk gcc g++

# interactive cli installation

sudo apt install -y --no-install-recommends ncurses-term

sudo sh ./l_BaseKit_p_2023.2.0.49397_offline.sh -a --cli

# list components included in oneAPI Base Toolkit

sh ./l_BaseKit_p_2023.2.0.49397_offline.sh -a --list-components

# install a subset of components with silent/unattended option

sudo sh ./l_BaseKit_p_2023.2.0.49397_offline.sh -a --silent --eula accept --components intel.oneapi.lin.dpcpp-cpp-compiler:intel.oneapi.lin.mkl.devel

If you install oneAPI without sudo privileges, it will be installed under the user directory: /home/{username}/intel/oneapi/. After the installation completes, the setup script prints the installation location.

Intel oneAPI HPC Toolkit:

wget https://registrationcenter-download.intel.com/akdlm/IRC_NAS/0722521a-34b5-4c41-af3f-d5d14e88248d/l_HPCKit_p_2023.2.0.49440_offline.sh

sudo sh ./l_HPCKit_p_2023.2.0.49440_offline.sh -a --silent --eula accept

Intel MKL library

Installing individual components:

wget https://registrationcenter-download.intel.com/akdlm/IRC_NAS/adb8a02c-4ee7-4882-97d6-a524150da358/l_onemkl_p_2023.2.0.49497_offline.sh

sudo sh ./l_onemkl_p_2023.2.0.49497_offline.sh -a --silent --eula accept

After installation, do not forget to source the environment setup script before using the libraries:

source /opt/intel/oneapi/setvars.sh
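
The link lines used in this guide rely on MKLROOT, which setvars.sh exports. A quick check before running configure can catch a forgotten source step; check_mkl is a hypothetical helper name, and the lib/intel64 subdirectory is assumed from the link line used here.

```shell
# Verify that the MKL environment is in place before configuring QE.
check_mkl() {
    if [ -z "${MKLROOT:-}" ]; then
        echo "MKLROOT is not set; source setvars.sh first" >&2
        return 1
    fi
    if [ ! -d "$MKLROOT/lib/intel64" ]; then
        echo "missing directory: $MKLROOT/lib/intel64" >&2
        return 1
    fi
    echo "MKL found under $MKLROOT"
}

# Usage: check_mkl && ./configure ...
```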

Compile Quantum Espresso:

wget https://gitlab.com/QEF/q-e/-/archive/qe-7.2/q-e-qe-7.2.tar.gz

tar -zxvf q-e-qe-7.2.tar.gz

rm q-e-qe-7.2.tar.gz

cd q-e-qe-7.2

./configure \

F90=mpiifort \

MPIF90=mpiifort \

CC=mpicc CXX=icc \

F77=mpiifort \

FFLAGS="-O3 -assume byterecl -g -traceback" \

LAPACK_LIBS="-L${MKLROOT}/lib/intel64 -lmkl_scalapack_lp64 -lmkl_intel_lp64 -lmkl_sequential -lmkl_core -lmkl_blacs_intelmpi_lp64 -lpthread -lm -ldl" \

BLAS_LIBS="-L${MKLROOT}/lib/intel64 -lmkl_scalapack_lp64 -lmkl_intel_lp64 -lmkl_sequential -lmkl_core -lmkl_blacs_intelmpi_lp64 -lpthread -lm -ldl" \

SCALAPACK_LIBS="-L${MKLROOT}/lib/intel64 -lmkl_scalapack_lp64 -lmkl_intel_lp64 -lmkl_sequential -lmkl_core -lmkl_blacs_intelmpi_lp64 -lpthread -lm -ldl"

make -j4 all

Compiling Quantum Espresso with CMake

Please check the official documentation for more details. The build requires CMake version 3.14 or later.

apt update && apt install autoconf cmake gawk gcc g++ make
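
To confirm that the installed CMake meets the 3.14 minimum, the version strings can be compared with sort -V. A minimal sketch, assuming GNU coreutils (version_ge is a hypothetical helper name):

```shell
# True if version $1 is at least version $2. Relies on sort -V
# (GNU coreutils), which orders version strings numerically.
version_ge() {
    [ "$(printf '%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Usage:
#   installed=$(cmake --version | head -n 1 | awk '{print $3}')
#   version_ge "$installed" 3.14 || echo "cmake $installed is too old" >&2
```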

I used the following steps to successfully compile Quantum Espresso using the 2023 versions of the Intel libraries on an Ubuntu 22.04 system:

cd q-e-qe-7.2

mkdir build && cd build

cmake -DCMAKE_C_COMPILER=mpiicc -DCMAKE_Fortran_COMPILER=mpiifort -DQE_ENABLE_SCALAPACK=ON ..

make -j4

mv bin ..

cd ..

rm -rf build